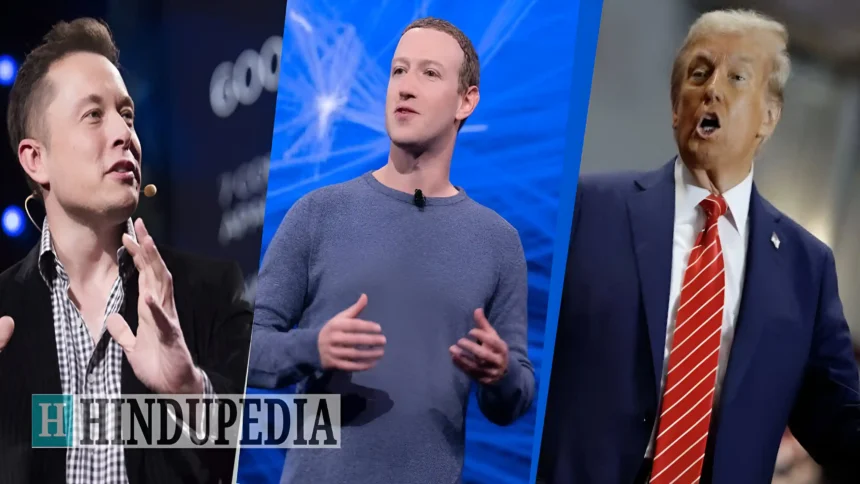

In a significant policy shift, Meta Platforms, the parent company of Facebook and Instagram, has announced the termination of its third-party fact-checking program in the United States. This move replaces independent fact-checkers with a community-driven moderation system known as “Community Notes,” inspired by a similar feature on Elon Musk’s platform, X. The decision has ignited a multifaceted debate, raising questions about the implications for misinformation, political influence, and the future of content moderation on social media platforms.

Background of Meta’s Fact-Checking Program

Meta’s third-party fact-checking initiative was launched in 2016 as a response to the proliferation of misinformation during the U.S. presidential election. The program enlisted independent organizations to evaluate the accuracy of content shared on its platforms, aiming to curb the spread of false information. Over the years, this initiative expanded globally, partnering with numerous fact-checking entities to enhance the reliability of information disseminated on Facebook and Instagram.

The Shift to Community Notes

Mark Zuckerberg, CEO of Meta, has said that the transition to Community Notes is intended to promote free expression and reduce censorship. He contends that the previous fact-checking program led to excessive censorship and was tainted by political bias, which eroded user trust. The Community Notes system lets users add context to posts, which is meant to make content moderation more democratic. This model mirrors the system employed by X, where community members collaboratively determine when posts are potentially misleading and provide additional context.

Political Implications and Timing

The timing of this policy change has raised questions about potential political motivations. The announcement came shortly before the inauguration of President-elect Donald Trump, leading to speculation that Meta’s decision may be an effort to align with the incoming administration. Critics argue that this move could be perceived as an attempt to curry favor with those in power, especially considering Meta’s ongoing antitrust case with the Federal Trade Commission (FTC).

Relocation of Content Moderation Teams

In addition to policy changes, Meta plans to relocate its content moderation teams from California to Texas. This strategic move is aimed at addressing perceived cultural biases associated with Silicon Valley and fostering a more diverse perspective in content moderation. By situating these teams in a different region, Meta hopes to mitigate accusations of political bias and enhance the objectivity of its moderation efforts.

Expert Opinions and Criticisms

The decision to eliminate independent fact-checkers has drawn a range of reactions from experts and advocacy groups. Angie Drobnic Holan, director of the International Fact-Checking Network, criticized the new approach, warning that it might inundate users with false information. She expressed concern that the Community Notes system may not effectively curb misinformation and could lead to more disinformation and hate speech on Meta’s platforms.

Other critics argue that the move could facilitate the spread of misinformation and extremism. The Simon Wiesenthal Center and former Secretary of Labor Robert Reich have condemned the decision, stressing the risk of increased online hate and division. They emphasize that Meta has a responsibility to combat disinformation in order to limit harm to society.

Support for the New Approach

Conversely, some view the shift to Community Notes as a positive step toward decentralizing information control and reducing the influence of legacy media. Right-leaning content creators perceive the changes as an opportunity to promote free speech and diminish perceived censorship. They argue that empowering users to moderate content fosters a more open and democratic platform, aligning with principles of free expression.

Implications for Misinformation and User Experience

The transition to a community-driven moderation system raises concerns about how effectively misinformation will be countered. Experts caution that user-generated notes may not provide the same level of scrutiny and accuracy as professional fact-checkers. There is apprehension that this approach could allow false information to spread, distorting public discourse and potentially causing real-world harm. Additionally, the effectiveness of Community Notes in different cultural and political contexts remains uncertain, which would complicate any global rollout.

Global Perspectives and Regulatory Challenges

Meta’s policy shift underscores emerging global tensions regarding online speech regulation. In Europe, stricter digital regulations, such as the Digital Services Act (DSA), mandate timely removal of illegal content and emphasize risk management to protect fundamental rights. Meta’s move away from third-party fact-checking to a crowd-sourced model may conflict with these regulations, potentially prompting stricter enforcement by European authorities. This geopolitical divide on digital regulation exemplifies differing U.S. and European stances, with significant implications for large tech firms’ operations and content policies.

Conclusion

Meta’s decision to remove independent fact-checkers in favor of a community-driven moderation system represents a pivotal moment in the evolution of content moderation on social media platforms. While the move is framed as an effort to promote free expression and reduce censorship, it has sparked a complex debate encompassing political implications, effectiveness in combating misinformation, and alignment with global regulatory standards. As Meta implements these changes, the impact on user experience, information integrity, and societal discourse will be closely scrutinized by stakeholders across the spectrum.